Data Language has been working with Cochrane as a technical partner for a number of years, and we have blogged extensively on the digital transformation at Cochrane, including how linked data and an RDF-based knowledge graph have become a core information backbone for their business processes.

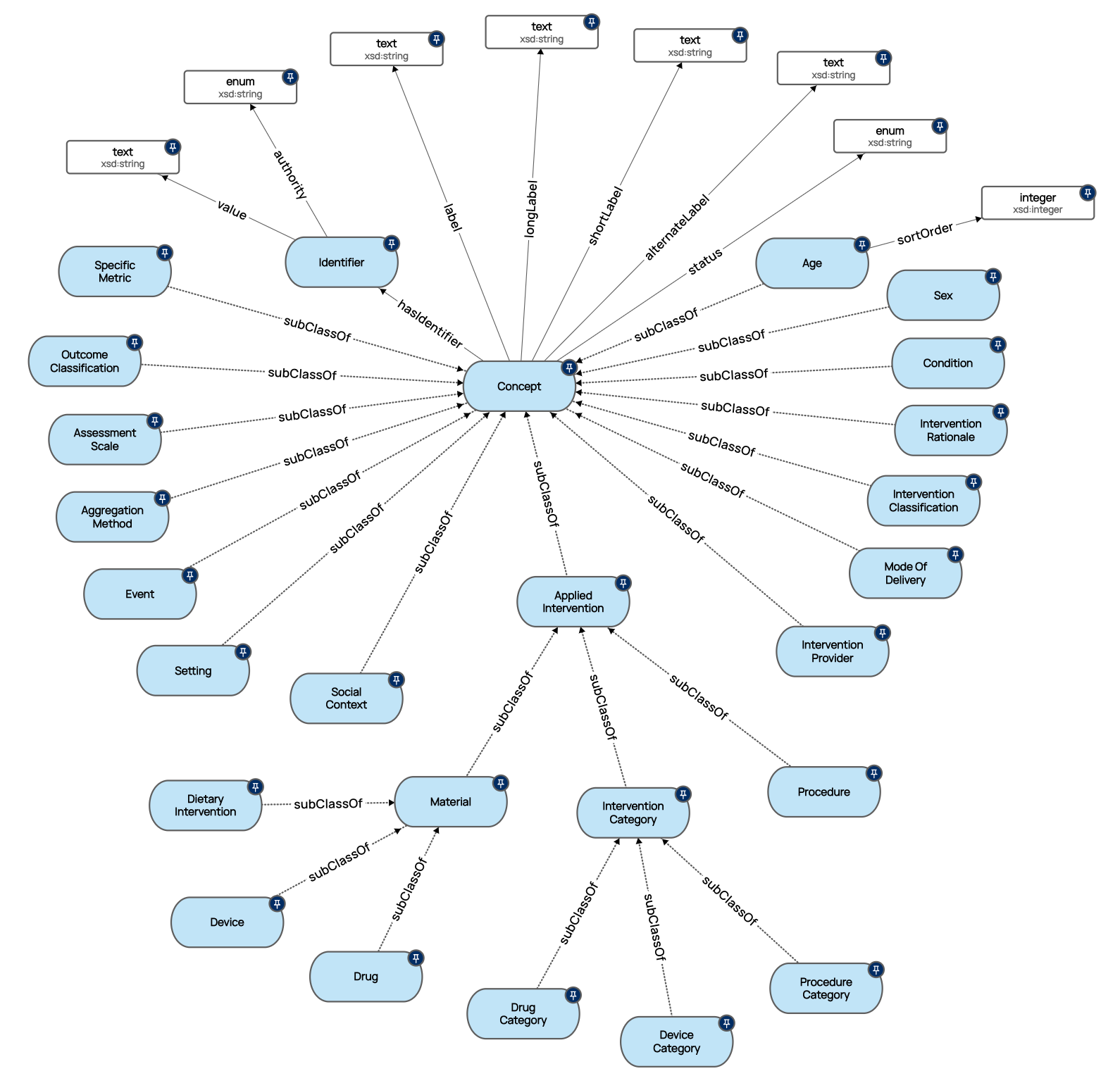

The Cochrane vocabularies, some 300k concepts, provide the linked reference data that describes the PICO domain of evidence-based healthcare: the Populations (and their conditions, ages, sexes, social context), the Interventions (drugs, devices, procedures, materials, etc.), the Comparisons, and the Outcomes. These concepts are annotated onto clinical evidence as PICO micrograph data structures that describe the questions the evidence answers. All of Cochrane’s vocabularies, content metadata and PICO annotations are stored in an enterprise graph database, and exposed by a cohesive set of microservice APIs that can be consumed and recombined to allow rapid innovation.

Data Language has provided the technical architecture, design and implementation of the knowledge graph, microservices, APIs, and the tooling that allows the Cochrane subject matter experts to curate and QA these PICO data structures as part of their daily business processes. This linked data platform has allowed Cochrane to innovate rapidly: enhancing the Cochrane Library with linked data query capability, and building, at lightning speed, the largest Covid-19 database of clinical evidence in the world. That database comprises more than 100k studies described with well-structured PICO metadata, and serves high volumes of requests and queries to researchers globally.

While the tooling and processes for Cochrane’s SMEs to create and maintain PICO graphs describing the Cochrane systematic reviews and studies are state of the art, the tooling around the management of the reference data vocabularies is less sophisticated. The Cochrane vocabularies consist of a highly curated set of linked data concepts that form a graph in their own right, with class and property semantics, transitivity, and multi-parent taxonomic structures. Although Cochrane has an existing visual editor for operating on individual concepts in the reference data, it is limited in functionality: it does not allow bulk ingest and updates, rich search and discovery for quality assurance and data cleansing, or other operations such as merge, migrate, and workflow.

To solve the vocabulary management problem, we have integrated our product Data Graphs into the Cochrane linked data ecosystem.

Data Graphs is a SaaS product designed to lower the barrier of entry to Knowledge Graphs. While it can be used purely as a Knowledge Graph in its own right, it can also be used as a linked data vocabulary management tool, and integrated with an enterprise graph database or other business systems using its API and webhook event triggers. It has an intuitive user experience for managing linked data, and powerful search, discovery and visualisation capability, making it an ideal vocabulary management tool for Cochrane.

Data Graphs allows you to design or replicate any domain model or ontology, and populate it with linked data, so it was a straightforward task to replicate the existing Cochrane concept model and ingest their vocabularies. The screenshot below shows the Cochrane model recreated in Data Graphs.

Data Graphs provides a number of benefits to Cochrane for managing their linked data vocabularies :

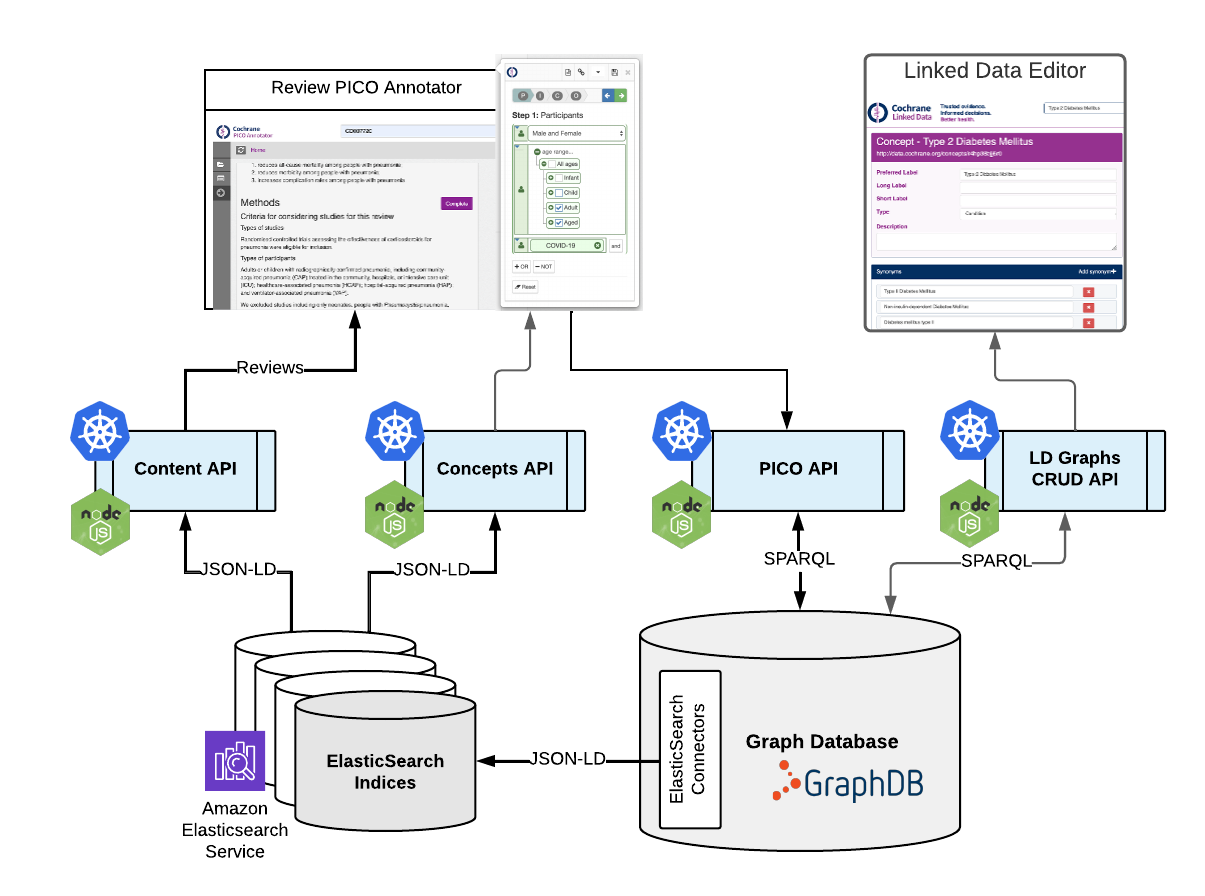

Cochrane’s linked data architecture is centred around an enterprise graph database (Ontotext’s RDF-based GraphDB), a set of microservices, and online tools for annotating systematic reviews and clinical studies with well-formed PICO graphs that describe the evidence. A slice of the logical architecture is shown below and is discussed in more detail here:

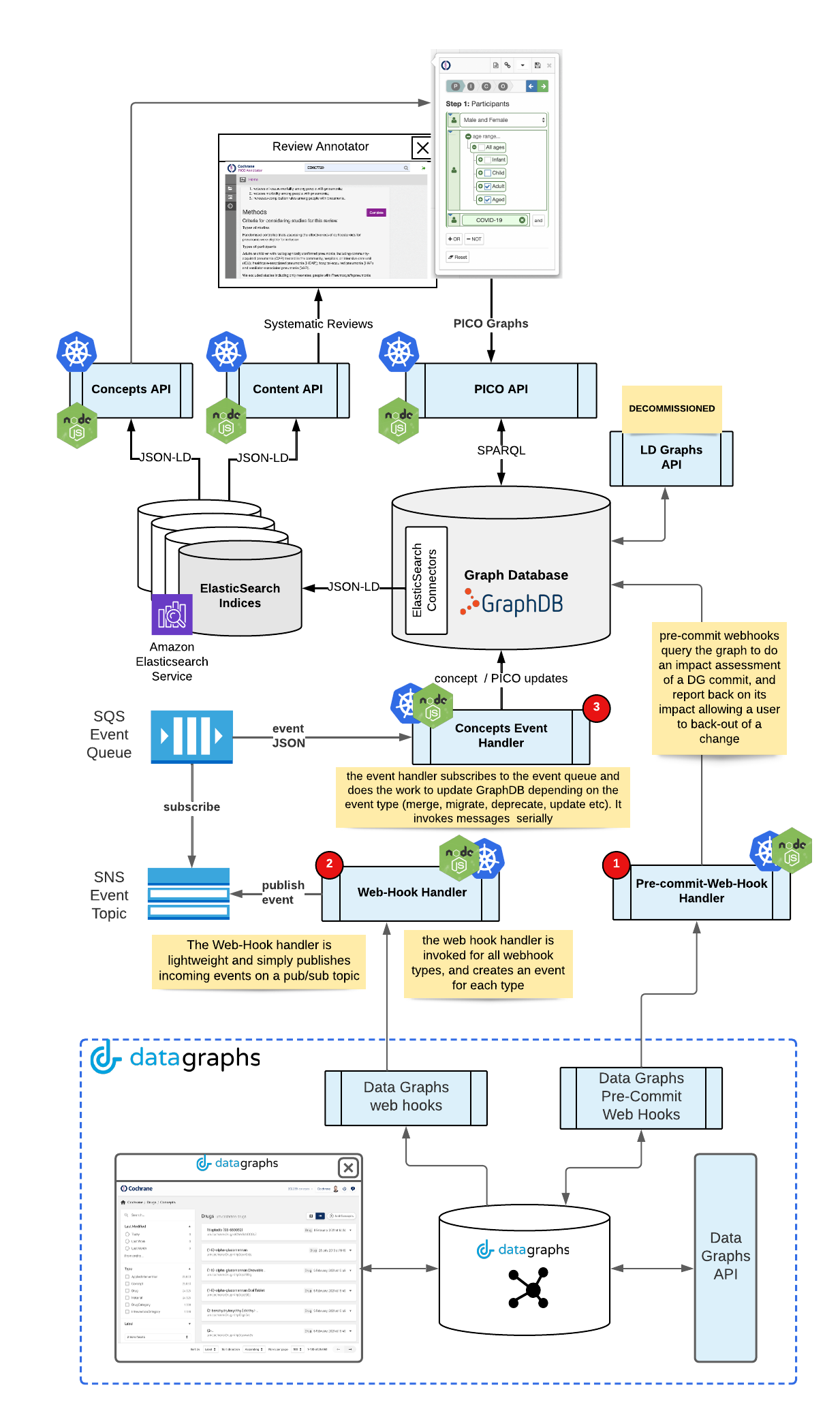

To integrate Data Graphs we needed an integration pattern that is robust and also allows Cochrane SMEs to understand the impact of changes they may make to the vocabularies, so that they can make an informed decision on whether or not to proceed with the updates.

For integration with an organisation’s business systems, Data Graphs comes with an expressive, contemporary REST API serving JSON-LD payloads (pull), and a suite of webhooks for event-driven integrations (push). The webhooks can be configured for different core operations: Create, Update, Delete, Merge, etc. For Cochrane’s purposes the webhooks are ideal.

Furthermore, for each webhook, a pre-commit remote endpoint can be configured, which is invoked before a write operation is committed in Data Graphs. The target business system can respond to this call with a payload of information that Data Graphs renders to the user as an assessment of the impact of completing the transaction. This gives the user an opportunity to back out. These pre-commit hooks allow the target system to respond to a variety of scenarios :

When consuming webhooks (a webhook is essentially an HTTP endpoint or API in a target business system that is configured and invoked on some trigger condition), we need to do everything reasonable to ensure that a fired event is not lost due to unforeseen circumstances (e.g. target system failures, connectivity issues). To achieve this, the target system endpoint needs to be as robust as possible and ideally decoupled from other downstream systems.

The best way of achieving this in the target system is to build an extremely lightweight microservice that simply receives the HTTP event payload and places it on a topic or queue. This queue can then be consumed at the target system's leisure and can cope with failure downstream (the message will not be lost if the consumer fails temporarily). The target system can take this further with dead-letter queues and retries to cope with a variety of challenging circumstances. This is the pattern adopted at Cochrane and is a pattern we would generally recommend for consuming webhooks between other products and platforms. You can see the logical architecture for this below :

The key integration points (numbered in red) within the Cochrane technical stack are :

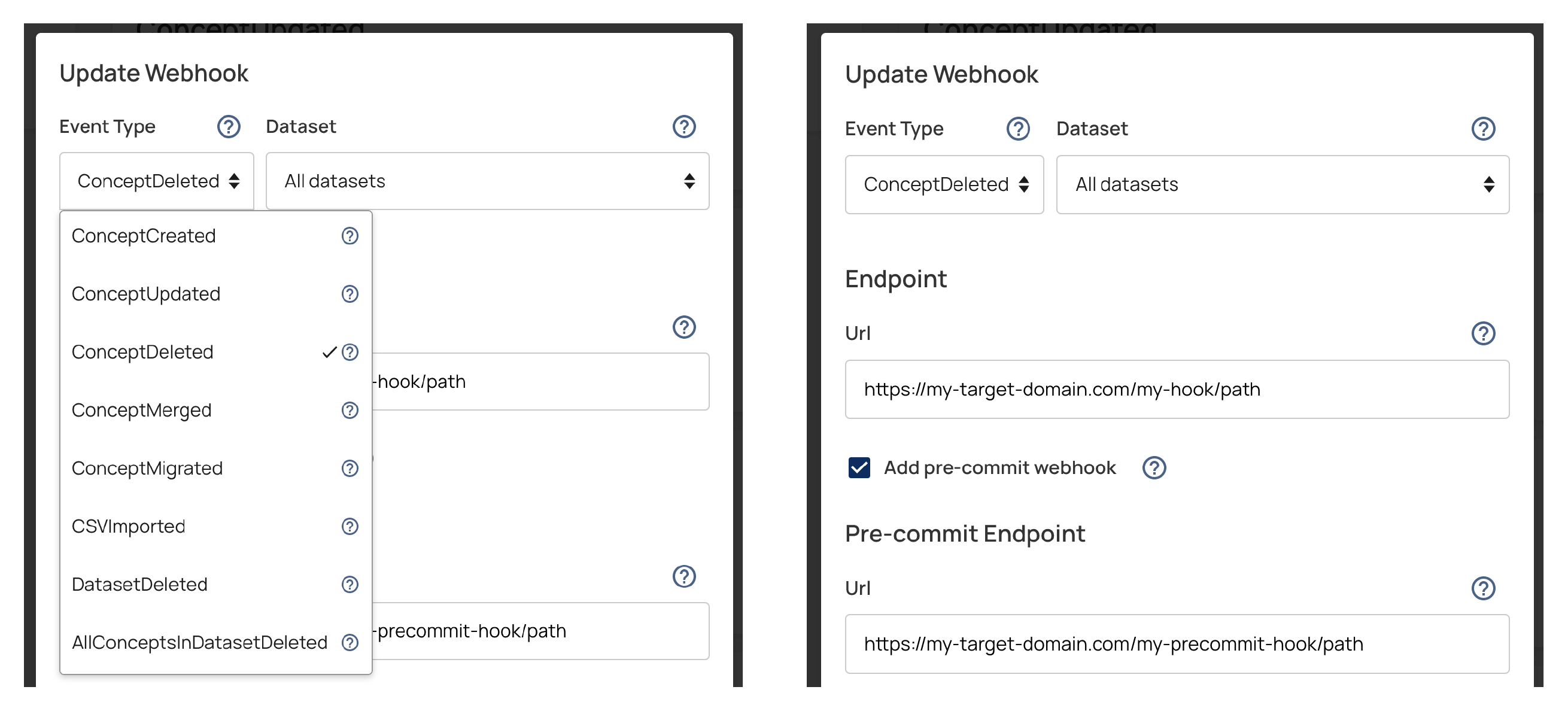
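The receive-and-enqueue pattern recommended above can be sketched in a few lines. This is a minimal illustration, not Cochrane's implementation: the in-process `queue.Queue` stands in for a durable topic or queue, and the names `enqueue_event`, `drain`, `WebhookHandler` and `serve` are hypothetical. The payload is treated as an opaque JSON object because the Data Graphs event format is not shown in this article.

```python
import json
import queue
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: an in-process queue stands in for a durable topic or queue.
EVENTS: "queue.Queue[dict]" = queue.Queue()


def enqueue_event(raw_body: bytes, events: queue.Queue) -> int:
    """Enqueue a JSON object payload and return the HTTP status to send back."""
    try:
        payload = json.loads(raw_body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400
    if not isinstance(payload, dict):
        return 400
    events.put(payload)
    return 202  # accepted for later processing


def drain(events: queue.Queue, handle, dead_letters: list, max_attempts: int = 3) -> None:
    """Process queued events, retrying each one up to max_attempts times.

    Events that still fail are appended to dead_letters instead of being lost.
    """
    while not events.empty():
        event = events.get()
        for attempt in range(1, max_attempts + 1):
            try:
                handle(event)
                break
            except Exception:
                if attempt == max_attempts:
                    dead_letters.append(event)


class WebhookHandler(BaseHTTPRequestHandler):
    """Deliberately thin receiver: read the body, enqueue it, reply."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        status = enqueue_event(self.rfile.read(length), EVENTS)
        self.send_response(status)
        self.end_headers()


def serve(host: str = "127.0.0.1", port: int = 8080) -> None:
    """Run the receiver until interrupted (host and port are placeholders)."""
    HTTPServer((host, port), WebhookHandler).serve_forever()
```

The receiver does no business logic of its own, so it has very little that can fail; all of the work that might fail happens in the consumer, where retries and the dead-letter list apply.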

The webhooks in Data Graphs are easy to configure, as can be seen in the following screenshots.

First, choose an event type to trigger a webhook, then configure the endpoint in the target system, an optional pre-commit hook, and any headers / security tokens that need to be sent with the request :

The final step was to load the Cochrane linked data vocabularies into Data Graphs, conforming to the domain model we created earlier. This can be done in different ways.

In this case, the API was the easiest approach as we could read pages of JSON-LD concepts from Cochrane’s concepts API, and pipe them into the Data Graphs write API. As the ontology models in both were identical, this was a relatively trivial migration script.
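The shape of such a page-by-page migration might look like the sketch below. It is an illustration under assumptions, not the script we ran: `fetch_page` and `write_batch` are hypothetical callables that wrap the two APIs, and the pagination scheme (zero-based page numbers, with an empty page marking the end) is assumed rather than taken from either API.

```python
from typing import Callable, Iterator, List

Concept = dict  # one JSON-LD concept document, passed through unchanged


def iter_pages(fetch_page: Callable[[int], List[Concept]]) -> Iterator[List[Concept]]:
    """Yield pages from the source API until an empty page is returned."""
    page = 0
    while True:
        concepts = fetch_page(page)
        if not concepts:
            return
        yield concepts
        page += 1


def migrate(
    fetch_page: Callable[[int], List[Concept]],
    write_batch: Callable[[List[Concept]], None],
) -> int:
    """Copy every concept from source to target and return how many were copied."""
    total = 0
    for concepts in iter_pages(fetch_page):
        # No transformation step is needed when both models are identical.
        write_batch(concepts)
        total += len(concepts)
    return total
```

Passing the two API calls in as functions keeps the loop independent of any particular HTTP client and makes it easy to dry-run against in-memory data first.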

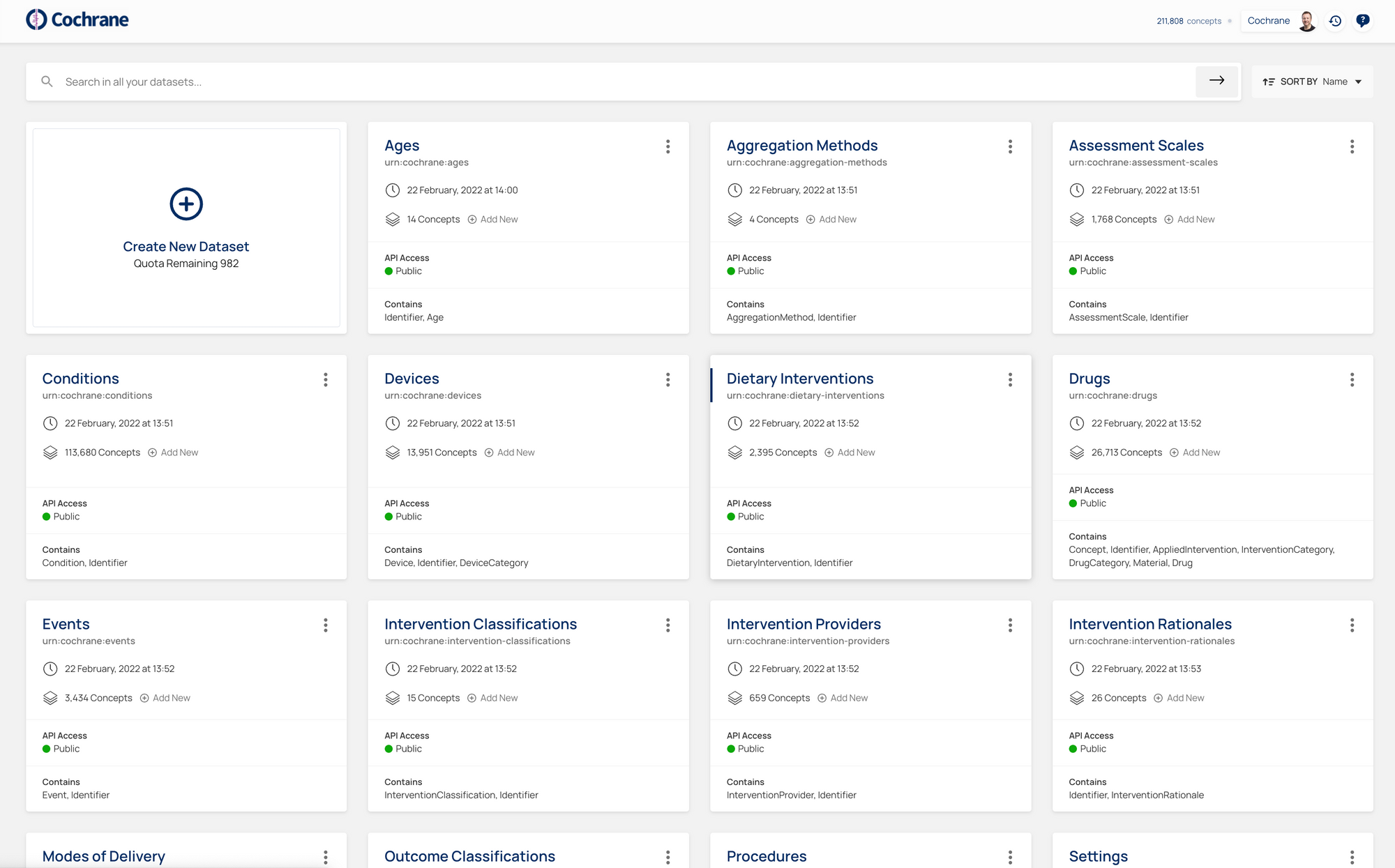

With this in place, Cochrane now has a state-of-the-art linked data management toolkit. The fully white-labelled user experience engenders user ownership. Cochrane’s information experts can organise their core entities in logical datasets for rapid navigation:

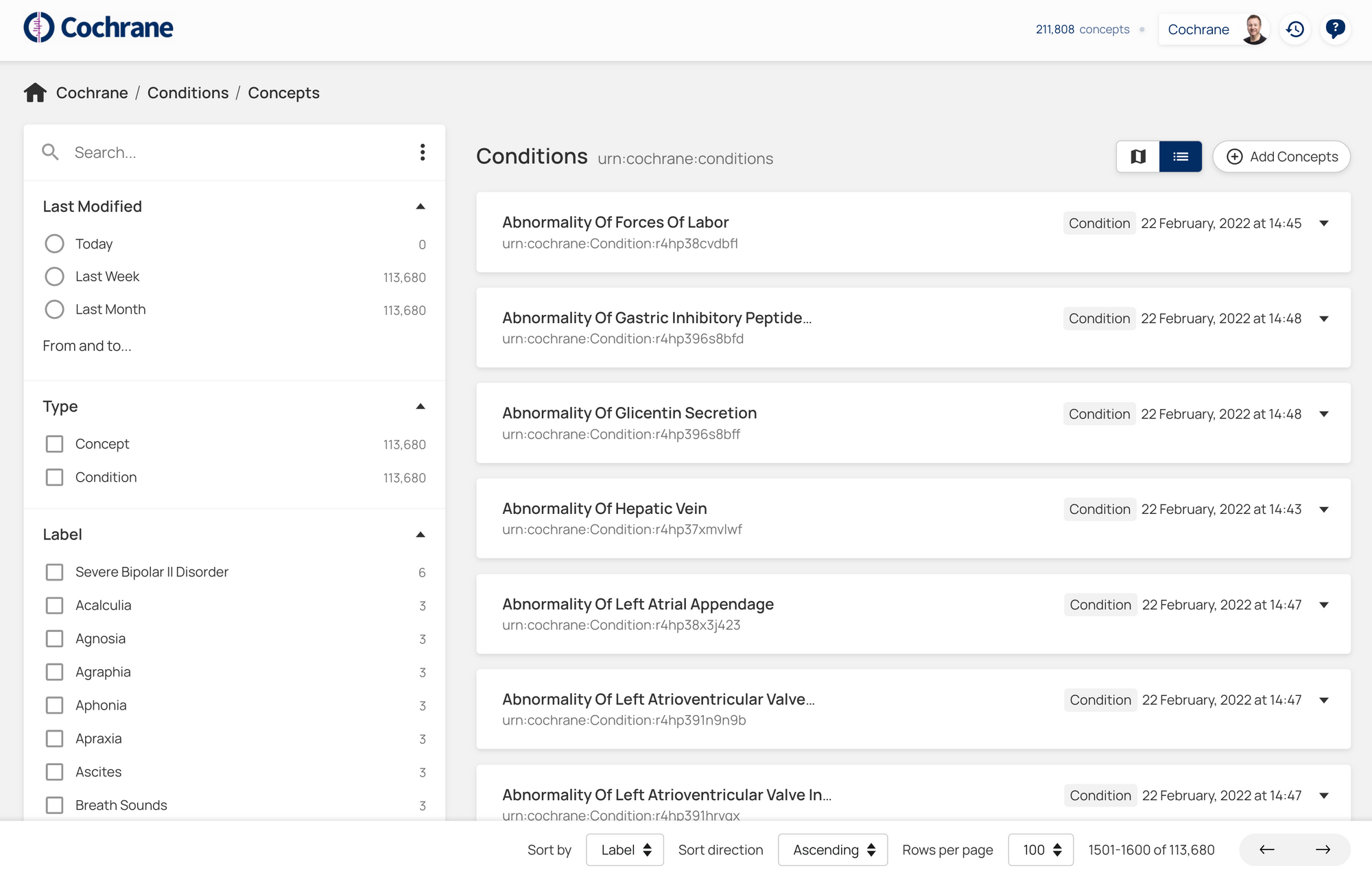

Drilling into each dataset, an SME can search for concepts, and easily find potential duplicates through a complex query or by using the label/property facets :

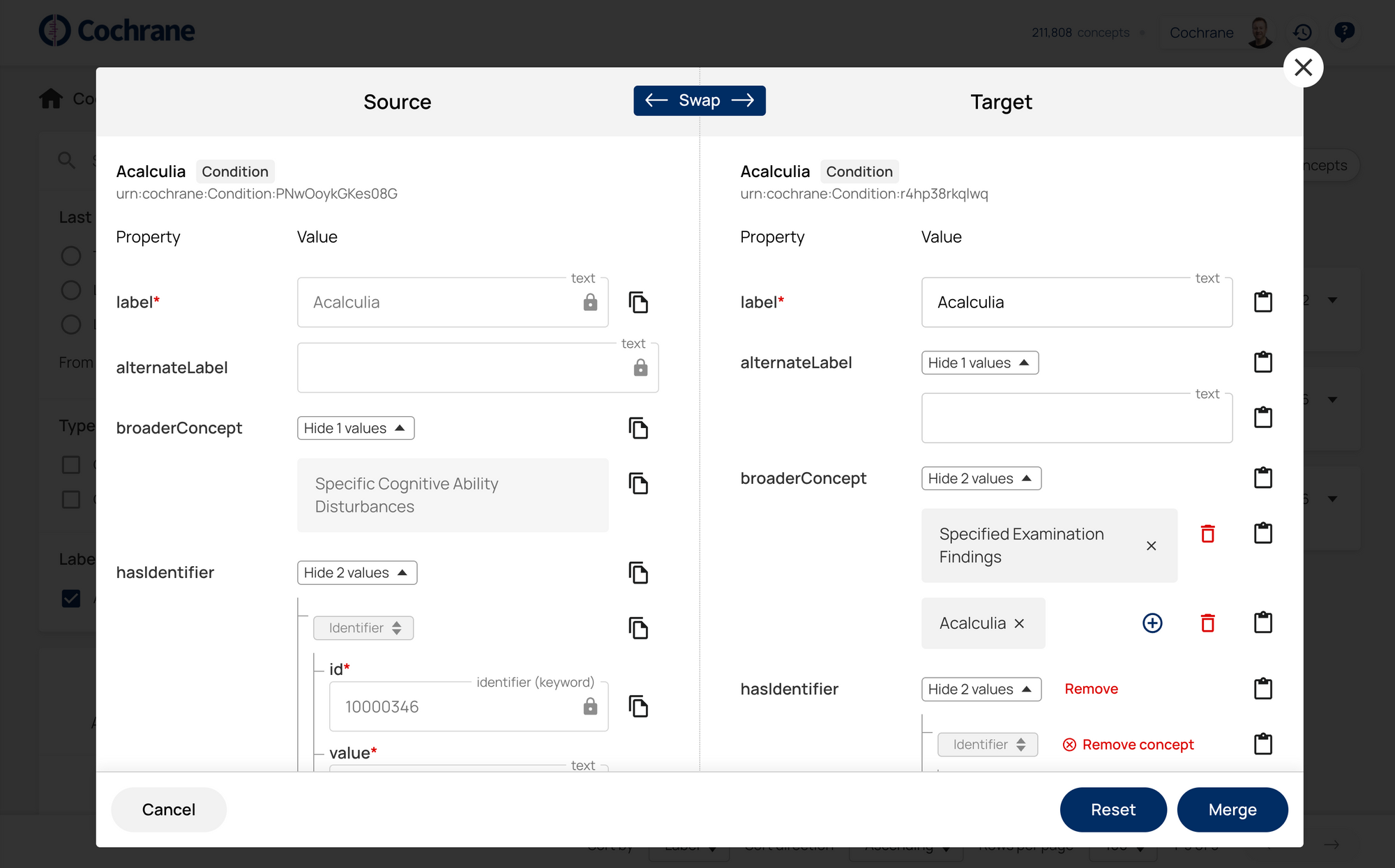

And merge those duplicates with an intuitive UI :

The resulting merge transaction is handled by the webhook event, which not only merges the concepts in the Cochrane graph database but also updates every PICO graph that referenced the source concept so that it references the target concept instead. Similarly, a delete removes the concept reference from all content and PICO annotations.
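To make the re-pointing step concrete, here is a small in-memory sketch. In the real platform this is an update against the graph database; the dictionary layout (document id, then PICO slot, then a list of concept IRIs) and the function names `merge_concept` and `delete_concept` are assumptions made purely for illustration.

```python
from typing import Dict, List

# Assumed layout: document id -> PICO slot -> list of concept IRIs.
Annotations = Dict[str, Dict[str, List[str]]]


def merge_concept(annotations: Annotations, source: str, target: str) -> int:
    """Re-point references from source to target; return the number of slots changed."""
    changed = 0
    for pico in annotations.values():
        for slot, concepts in list(pico.items()):
            if source not in concepts:
                continue
            updated: List[str] = []
            for iri in concepts:
                iri = target if iri == source else iri
                if iri not in updated:  # avoid a duplicate if target was already present
                    updated.append(iri)
            pico[slot] = updated
            changed += 1
    return changed


def delete_concept(annotations: Annotations, concept: str) -> int:
    """Remove every reference to concept; return the number of slots changed."""
    changed = 0
    for pico in annotations.values():
        for slot, concepts in list(pico.items()):
            if concept in concepts:
                pico[slot] = [iri for iri in concepts if iri != concept]
                changed += 1
    return changed
```

The de-duplication in `merge_concept` matters when a document was annotated with both duplicates: after the merge it should reference the surviving concept once.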

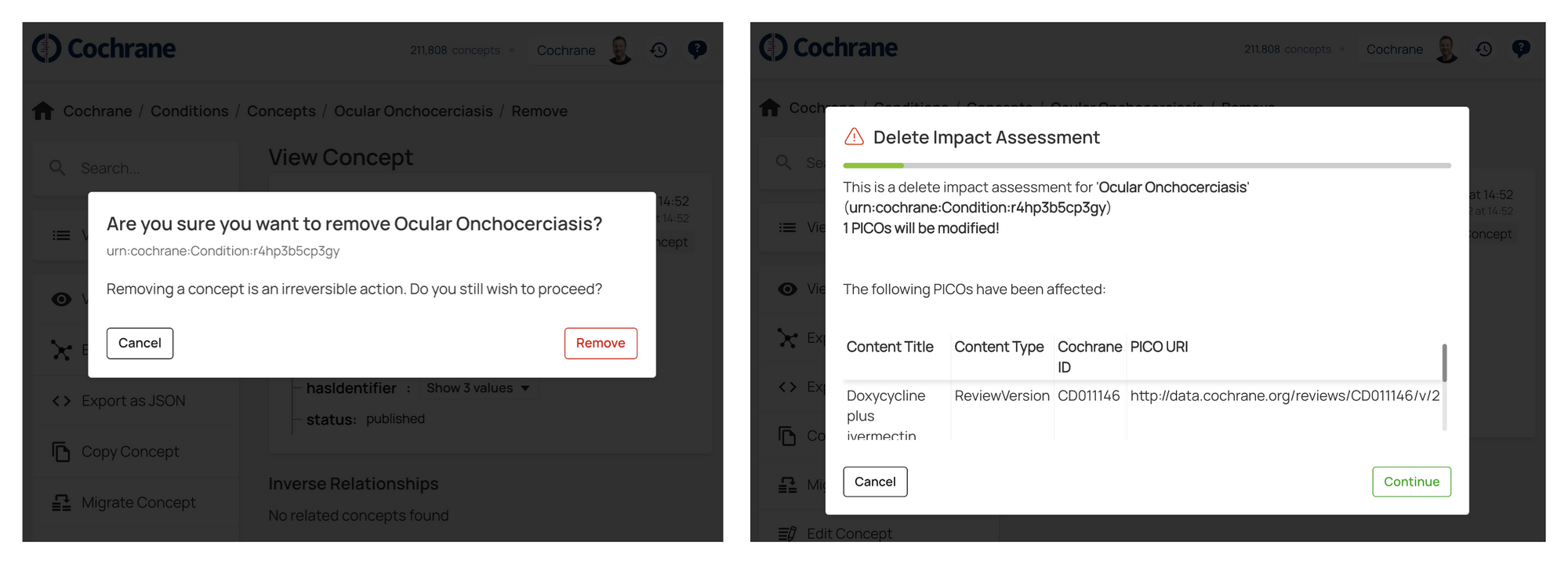

Making any change, such as deleting a concept, results in the pre-commit hook requesting an impact assessment, so the user can make an informed decision to continue or cancel:

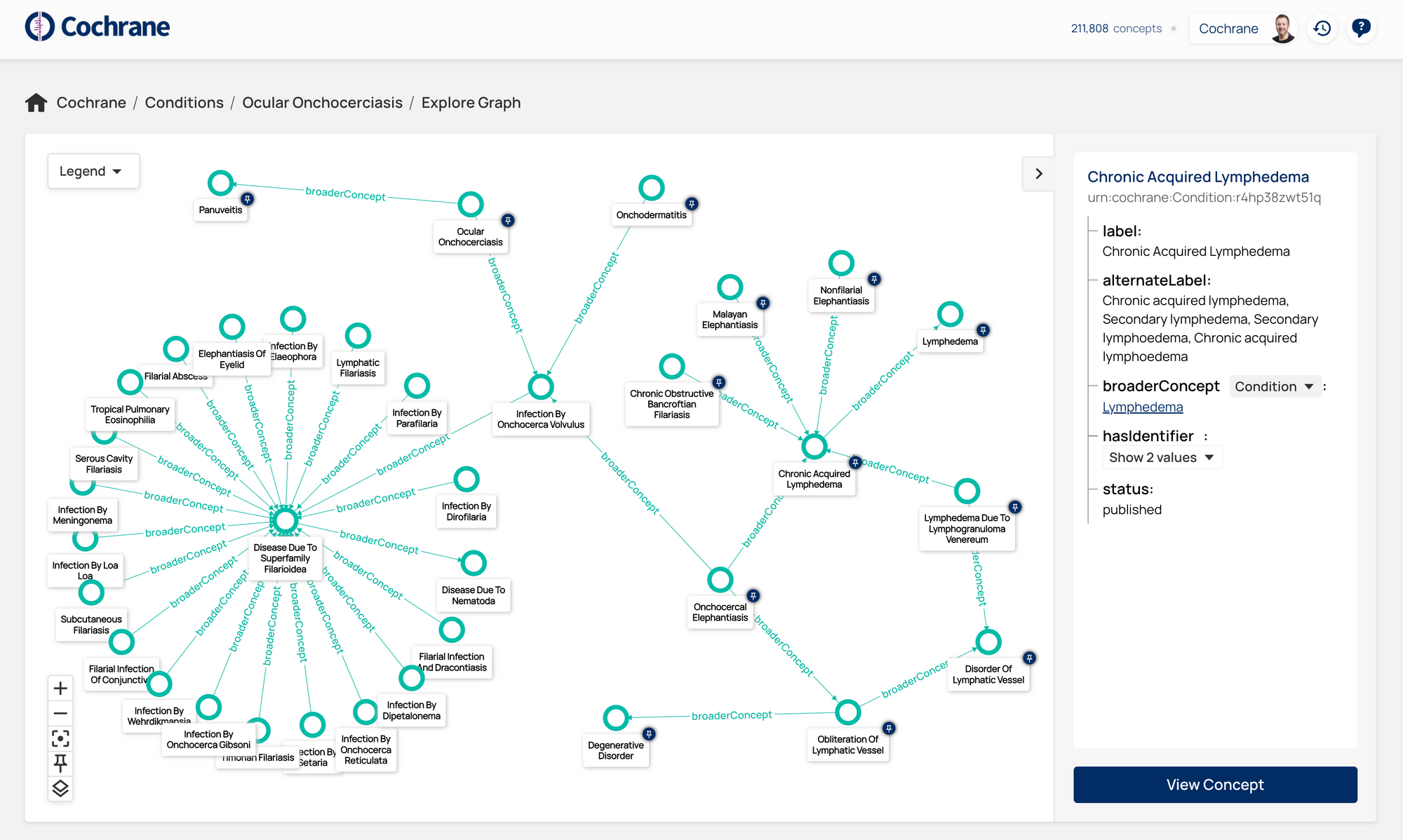
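The kind of calculation a pre-commit endpoint performs before a delete can be sketched as follows. The response fields (`concept`, `affectedCount`, `affectedDocuments`, `message`) and the function name are hypothetical: the payload format that Data Graphs expects is not described in this article, so treat the shape as a placeholder.

```python
from typing import Dict, List

# Assumed layout: document id -> PICO slot -> list of concept IRIs.
Annotations = Dict[str, Dict[str, List[str]]]


def impact_of_delete(annotations: Annotations, concept_iri: str) -> dict:
    """Summarise which annotated documents would lose a reference to concept_iri."""
    affected = sorted(
        doc_id
        for doc_id, pico in annotations.items()
        if any(concept_iri in concepts for concepts in pico.values())
    )
    return {
        "concept": concept_iri,
        "affectedCount": len(affected),
        "affectedDocuments": affected,
        "message": f"Deleting this concept removes it from {len(affected)} PICO annotation(s).",
    }
```

Because the hook runs before the commit, it only reads data; the write itself happens later, when the user confirms and the corresponding webhook event fires.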

The knowledge graph can be explored visually to help find and correct anomalies in the vocabulary, such as circular relationships :
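Circular relationships can also be checked for programmatically. The sketch below is a generic depth-first search over a multi-parent taxonomy, not a feature of Data Graphs. It assumes the vocabulary has been exported as a mapping from each concept to its broader (parent) concepts; `find_cycle` is a hypothetical helper name.

```python
from typing import Dict, List, Optional

WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, fully explored


def find_cycle(broader: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle as a list of concepts (first == last), or None if acyclic."""
    state: Dict[str, int] = {}
    for start in broader:
        if state.get(start, WHITE) != WHITE:
            continue
        state[start] = GREY
        path = [start]
        iterators = [iter(broader.get(start, []))]
        while iterators:
            for parent in iterators[-1]:
                seen = state.get(parent, WHITE)
                if seen == GREY:
                    # parent is already on the current path, so the path loops back
                    return path[path.index(parent):] + [parent]
                if seen == WHITE:
                    state[parent] = GREY
                    path.append(parent)
                    iterators.append(iter(broader.get(parent, [])))
                    break
            else:
                # every parent explored: this concept is not part of a cycle
                state[path.pop()] = BLACK
                iterators.pop()
    return None
```

An explicit stack is used instead of recursion so that a deep hierarchy, or a long cycle, cannot exceed the interpreter's recursion limit.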

The Cochrane bioinformatics SMEs now have many more capabilities at their disposal, taking their ability to deliver the next generation of evidence-based healthcare innovations to a new level.